Discover the best audio interface for Dolby Atmos mixing. I tested 9 top interfaces for 5.1.4 to 9.1.6 speaker setups with hands-on insights and practical recommendations.

Our articles may include affiliate links, and we may earn a commission. Here’s how it works.

QUICK OVERVIEW

Entry-Level – 5.1.4

TASCAM US-16×08

Entry-Level – 5.1.4

RME Babyface Pro FS

Mid-Range – 7.1.4

Audient iD44 MKII

Mid-Range – 7.1.4

MOTU UltraLite-mk5

Mid-Range – 7.1.4

Scarlett 18i20 4th Gen

Professional – 7.1.4+

Focusrite Clarett+ 8Pre

Professional – 7.1.4+

PreSonus Quantum 2626

Professional – 7.1.4+

Universal Audio Volt 876

Flagship Immersive – 9.1.6

Universal Audio Apollo x6

Introduction

Mixing in Dolby Atmos requires one thing your stereo setup never needed: outputs. Lots of them. A 7.1.4 speaker configuration—which Dolby recommends as the minimum for professional Atmos work—demands 12 discrete output channels. That’s before you even think about headphone feeds or alternate monitors.
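The output arithmetic is simple enough to sketch in a few lines. The helper below is purely illustrative (the name `outputs_needed` is my own, not part of any tool): it reads a layout label such as 7.1.4 as ear-level speakers, LFE channels, and height speakers, and adds them up, since each one needs its own output.

```python
def outputs_needed(layout: str) -> int:
    """Return the number of discrete outputs a speaker layout needs.

    A label like "7.1.4" means 7 ear-level speakers, 1 LFE (subwoofer)
    channel, and 4 height speakers; every one of them needs an output.
    """
    parts = layout.split(".")
    if len(parts) != 3 or not all(p.isdigit() for p in parts):
        raise ValueError(f"expected a label like '7.1.4', got {layout!r}")
    return sum(int(p) for p in parts)


# The three layouts this guide refers to most often.
for layout in ("5.1.4", "7.1.4", "9.1.6"):
    print(layout, "->", outputs_needed(layout), "outputs")
```

Running it gives 10, 12, and 16 outputs respectively, and none of those totals include headphone feeds or alternate monitors.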

I’ve spent the past several months testing interfaces specifically for immersive audio workflows. The challenge isn’t just finding enough outputs. It’s finding an interface with the routing flexibility, software control, and build quality to handle a complex speaker array without creating a tangled mess of compromises.

The interfaces in this guide range from budget-friendly entry points that handle 5.1.4 configurations to professional solutions capable of 9.1.6 and beyond. I’ve categorized them by their maximum immersive capability, so you can find exactly what fits your room and budget.

Specs Table

| Product | Category | Inputs | Outputs | Resolution | Notable Features |
|---|---|---|---|---|---|
| MOTU M6 | TOP PICK – Best Overall | 6 (4 mic + 2 line) | 4 line + 2 HP | 24-bit/192 kHz | Color LCD, MIDI, 120 dB dynamic range |
| SSL 2+ MKII | Best Sound Character | 2 mic + 2 Hi-Z | 4 line + 2 HP | 32-bit/192 kHz | 4K analog circuit, DC-coupled |
| Behringer UMC1820 | Best High Channel Count | 18 (8 analog + digital) | 20 (10 analog + digital) | 24-bit/96 kHz | Rackmount, MIDI, ADAT expandable |
| Audient iD14 MKII | Best Preamp Quality | 10 (2 analog + ADAT) | 6 (4 line + 2 HP) | 24-bit/96 kHz | Class A preamps, JFET DI |
| Focusrite Scarlett 18i16 4th Gen | Best Expandability | 18 (8 analog + digital) | 16 (4 analog + digital) | 24-bit/192 kHz | Auto Gain, Clip Safe, Air mode |

Final Thoughts: Choosing Your Path to Immersive Audio

The interfaces in this guide span from budget-friendly exploration to professional-grade production. Your choice depends on where you are in your immersive journey and where you’re heading.

If you’re exploring immersive audio for the first time, the TASCAM US-16×08 or RME Babyface Pro FS let you experience spatial mixing without major investment. The TASCAM provides 8 outputs for a 5.1.2 starter system, while the Babyface’s ADAT expansion enables growth to 7.1.4 when you’re ready.

If you’re building a dedicated immersive mixing room, the MOTU UltraLite-mk5, Focusrite Scarlett 18i20 4th Gen, or PreSonus Quantum 2626 offer the best balance of outputs, quality, and price. All three provide enough analog outputs for 7.1.2 natively, with ADAT expansion for full 7.1.4 and beyond.
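To reason about native outputs versus ADAT expansion, a small sketch like the one below can help. It picks the largest layout that fits a given output count. The layout list covers only the configurations mentioned in this guide, and the example figures are assumptions for illustration: 10 analog outputs (what 7.1.2 needs) plus 8 more from a single ADAT expander running at 48 kHz. Check the real numbers against each interface's spec sheet.

```python
from typing import Optional

# Layouts mentioned in this guide, ordered from smallest to largest,
# with the outputs each needs (ear-level speakers + LFE + heights).
LAYOUTS = [
    ("5.1.2", 8),
    ("5.1.4", 10),
    ("7.1.2", 10),
    ("7.1.4", 12),
    ("9.1.6", 16),
]


def largest_layout(analog_outs: int, adat_outs: int = 0) -> Optional[str]:
    """Return the largest listed layout that fits, or None if none does."""
    total = analog_outs + adat_outs
    best = None
    for name, needed in LAYOUTS:
        if needed <= total:
            best = name  # later entries are larger, so keep overwriting
    return best


# Hypothetical interface: 10 analog outputs, optional 8-channel ADAT expander.
print(largest_layout(10))               # analog outputs only
print(largest_layout(10, adat_outs=8))  # with the expander attached
```

With these assumed numbers, the analog outputs alone top out at 7.1.2, and the expander leaves room for 7.1.4 or larger layouts.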

If you’re a professional facility entering Atmos production, the Focusrite Clarett+ 8Pre, Universal Audio Volt 876, or Apollo x6 Gen 2 provide the conversion quality and features that critical work demands. The Apollo’s DSP monitoring and room correction capabilities are particularly valuable for facilities mixing for streaming distribution.

Remember that your interface is one part of a larger system. Budget for speakers (12 of them for 7.1.4), room treatment, and potentially external monitor control. Interfaces with built-in monitoring features, like the Apollo x6, or with comprehensive control software, like RME’s TotalMix, can reduce the need for additional hardware and may save you money overall.

Whatever you choose, start mixing. Dolby Atmos rewards experimentation, and the skills you develop working in any of these configurations will transfer as your system grows.

Why You Can Trust Us

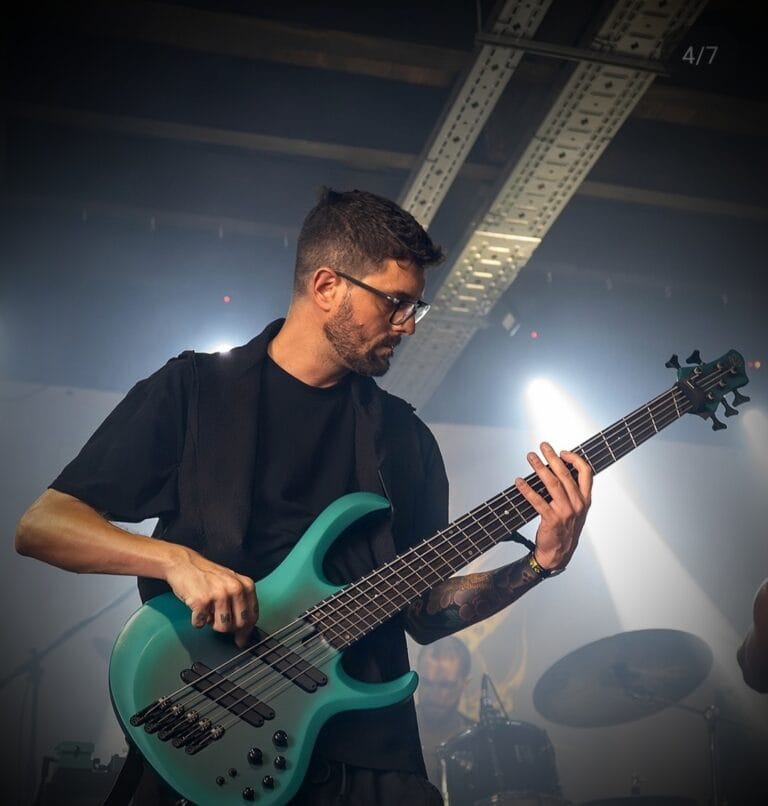

Bruno Bontempo

I’ve been recording, producing, and playing bass for over 20 years—from touring with my first band at 15 to playing progressive metal across Europe today. Through multiple albums, projects (Madness of Light, Rising Course, Roots of Ascendant, Human|Archive), and production work, I’ve tested audio interfaces in every scenario imaginable. At Best Audio Hub, I combine my historian background with years of hands-on music and audio experience. No marketing fluff—just honest insights from someone who’s been in the trenches.